Increase website traffic with Programmatic SEO

How does TripAdvisor have thousands of pages like "Top 10 things to do in each country"? Today we will build similar pages at scale for MyAlice!

It’s getting increasingly costly to drive your ICP (Ideal Customer Profile) to your website. Programmatic SEO gives you a relatively easy way to create pages or blogs at scale that help you generate more traffic over time. So, how can you start utilising Programmatic SEO to your advantage? At MyAlice, we ran an experiment and spun up 1500+ pages in a week.

What is programmatic SEO?

Programmatic SEO is a method that addresses the growing amount of search traffic by publishing landing pages on a large scale.

When planning a trip, you might have searched for “top things to do in <city>” and found blogs from TripAdvisor or other travel sites. Those sites have blogs for almost every city in the world, in different combinations (best fun things to do, top adventures, best bars and whatnot). Usually, they create these blogs with Programmatic SEO.

Pre-requisites:

A website built with a CMS (we used Webflow because it’s simple and its CMS has an API)

Keyword Research for your ICP (to finalise the topics you want to cover)

Data sources regarding those topics

Let’s move into details now.

Step 1 - Finalising topics with head terms and modifiers

The first things you will need to do are:

Getting search intents of your ICP

Selecting head terms for Programmatic SEO

Selecting modifiers

Bringing these together

For example, at MyAlice, our ICP is relatively new store owners (those who started their business during COVID-19), more specifically Shopify and WooCommerce store owners. We found a few search intents with high search volume but low competition, e.g. Top Shopify/WooCommerce Agencies in Pakistan, or Best Shopify/WooCommerce themes for a Fashion site. So our head terms were Shopify Agencies / Shopify themes, and our modifiers were countries / industries.

Now, all we have to do is to write blogs for all the Shopify agencies for each country. Looks like a couple of months of work!
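
To get a feel for how quickly head terms and modifiers multiply, here is a minimal sketch that combines them into page titles. The lists, the `build_titles` helper and the title template are illustrative assumptions, not the exact values we used.

```python
from itertools import product

# Illustrative values only; real lists would come from your keyword research.
head_terms = ["Shopify Agencies", "WooCommerce Agencies"]
countries = ["Pakistan", "Bangladesh", "Singapore"]


def build_titles(head_terms, modifiers, template="Top {head} in {modifier}"):
    """Return one page title per (head term, modifier) pair."""
    return [
        template.format(head=head, modifier=modifier)
        for head, modifier in product(head_terms, modifiers)
    ]


titles = build_titles(head_terms, countries)
print(len(titles))  # 2 head terms x 3 countries
print(titles[0])
```

Two head terms and three countries already give six pages, so a full country list pushes the count into the hundreds.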

Step 2 - Collecting the data sources

Now we need to collect data on each Shopify and WooCommerce agency (or at least the top ones). For this, we found a couple of reliable websites.

This website has a list of Shopify Plus partners.

This website has a list of a couple of WordPress Agencies.

This website has a list of top WooCommerce Agencies.

Once we have the data sources, we need to collect the data from them. This part is technical, and the approach depends on the site structure and on the API calls the site makes (I will skip the details of this process).

In general, you should use:

The Python requests and BeautifulSoup packages together if the site content is generated server side.

The Python requests package on its own, calling the API behind the page, if the site content is generated client side.
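
For the client-side case, the data often arrives as JSON from an endpoint the page calls in the background. The sketch below shows that idea under stated assumptions: the URL, the payload shape (`results`, `name`, `location`, `website`) and both helper names are hypothetical, and it uses the standard library's `urllib` so it has no dependencies (with requests, `requests.get(url).json()` does the same job).

```python
import json
from urllib.request import Request, urlopen

# Hypothetical endpoint; look for the real one in your browser's network tab.
API_URL = "https://example.com/api/agencies?page={page}"


def fetch_page(page):
    """Download one page of results and decode the JSON body."""
    request = Request(API_URL.format(page=page), headers={"Accept": "application/json"})
    with urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))


def extract_agencies(payload):
    """Pick the fields we care about from the assumed payload shape."""
    return [
        {
            "name": item["name"].strip(),
            # Keep only the last part of "City, Country"
            "country": item["location"].split(",")[-1].strip(),
            "website": item.get("website"),
        }
        for item in payload.get("results", [])
    ]


# Sample payload standing in for a real response, so no network call is made here.
sample = {"results": [{"name": " Acme Studio ", "location": "Lahore, Pakistan"}]}
print(extract_agencies(sample))
```

In a real run you would loop over pages with `fetch_page` and pass each response to `extract_agencies`.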

import requests
from bs4 import BeautifulSoup

# This function loops through all the Shopify Partner pages and collects agency details
def get_list_of_agencies():
    for x in range(18):
        URL = f'https://www.shopify.com/plus/partners/service?page={x}'
        page = requests.get(URL)
        soup = BeautifulSoup(page.content, "html.parser")
        agency = soup.find_all("div", class_="grid__item grid__item--mobile-up-7 grid__item--desktop-up-5 postcard-wrapper")
        for i in agency:
            link = i.find("a", class_="postcard postcard--link postcard--theme-white").get("href")
            agency_url = "https://www.shopify.com" + link
            get_agency_details(agency_url)

# this function get the particular agency details that we want to collect

def get_agency_details(agency_url):

agency_page = requests.get(agency_url)

x = BeautifulSoup(agency_page.content, "html.parser")

header = x.find("header", class_="grid-container profile-header")

logo = header.find("img", class_="js-profile-logo").get("src")

name = header.find("h1", class_="profile-heading").text.strip()

loc = header.find_all("span", class_="profile-intro__detail")[0].text.strip()

country = [loc.split(",")[-1].strip()]

website = header.find_all("span", class_="profile-intro__detail")[2].text.strip()

meta = x.find("div", class_="profile-meta")

desc = x.find("div", class_="profile-description").find_all("div", class_="profile-division")

service, industry = [], []

s = meta.find_all("div", class_="profile-division")[0].find_all("li")

for j in s:

service.append(j.find("a").text.strip())

description = desc[0].find("p").text.strip()

other_country, ind = None, None

if len(desc) > 2:

other_country = desc[2].find_all("li")

ind = desc[1].find_all("li")

if len(desc) == 2:

if desc[1].find("h2").text.strip() == "Other locations":

other_country = desc[1].find_all("li")

else:

ind = desc[1].find_all("li")

if ind:

for j in ind:

industry.append(j.find("a").text.strip())

if other_country:

for j in other_country:

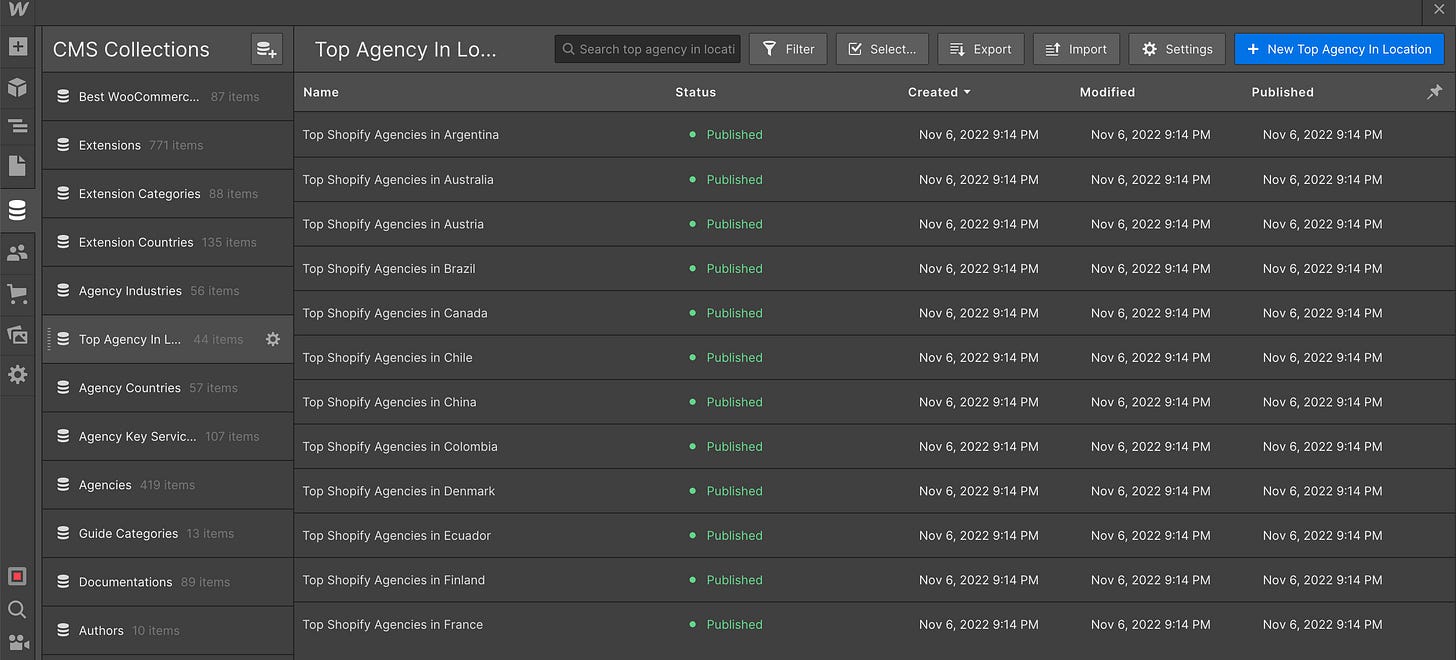

country.append(j.text.split(",")[-1].strip())Now that we have the data available, we will have to create relevant databases (or collections) in WebFlow. For this particular use case, we created the following collections:

Collection for Country

Collection for Industry

Collection for Agency

Collection for Top Agency based on Country

To link Collections to each other, we used Webflow’s Multi-Reference field. For example, an agency might be available in multiple countries, so Country has to be a linked Multi-Reference field in the Agency Collection.

The final blogs are based on the “Collection for Top Agency based on Country”. Typically, each item in this Collection should have a title (the blog name), a description, and a Multi-Reference field linking to the Agency Collection.
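
To make that relationship concrete, here is a minimal sketch of how scraped agency records could be grouped into one entry per country. The sample records, `group_by_country` and `build_pages` are hypothetical names used for illustration; in Webflow, the `agencies` list would hold Agency item IDs rather than names.

```python
from collections import defaultdict

# Sample records in the shape a scraper might produce (illustrative data).
agencies = [
    {"name": "Acme Studio", "countries": ["Pakistan", "United Kingdom"]},
    {"name": "Beta Commerce", "countries": ["Pakistan"]},
    {"name": "Gamma Digital", "countries": ["United Kingdom"]},
]


def group_by_country(agencies):
    """Map each country to the names of the agencies listed there."""
    grouped = defaultdict(list)
    for agency in agencies:
        for country in agency["countries"]:
            grouped[country].append(agency["name"])
    return dict(grouped)


def build_pages(grouped, head_term="Shopify Agencies"):
    """Create one 'Top Agency based on Country' entry per country."""
    return [
        {
            "title": f"Top {head_term} in {country}",
            "description": f"{len(names)} agencies operating in {country}",
            "agencies": names,  # stand-in for the Multi-Reference field
        }
        for country, names in sorted(grouped.items())
    ]


pages = build_pages(group_by_country(agencies))
for page in pages:
    print(page["title"], "->", page["agencies"])
```

An agency that operates in several countries shows up in several pages, which is exactly what the Multi-Reference field is for.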

Now that we have the structure in place, we need to insert all the data into the Webflow Collections. Fortunately, Webflow comes with an API that can be used to populate the Collections.
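
Before sending anything, each record has to be shaped into a `fields` object. The sketch below is one way to do that, not the exact code we ran: `slugify` and `build_fields` are hypothetical helpers, the `description` and `agencies` field slugs are assumptions that must match your own Collection, and the `_archived` / `_draft` flags reflect my understanding of what version 1.0.0 of the Webflow CMS API expects.

```python
import re


def slugify(text):
    """Lowercase the text and replace runs of non-alphanumerics with hyphens."""
    return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")


def build_fields(title, description, agency_ids):
    """Assemble the 'fields' object for one Collection item."""
    return {
        "name": title,
        "slug": slugify(title),
        "_archived": False,
        "_draft": False,
        # Assumed custom field slugs; use the ones defined in your Collection.
        "description": description,
        "agencies": agency_ids,
    }


fields = build_fields(
    "Top Shopify Agencies in Pakistan",
    "Shopify agencies operating in Pakistan.",
    ["<agency-item-id-1>", "<agency-item-id-2>"],  # placeholder item IDs
)
print(fields["slug"])
```

The resulting dictionary is the kind of `fields` value the sending function below takes as its second argument.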

# this function is used to send data to WebFlow

def send_data_to_webflow_collecction(url, fields):

response = requests.post(

url=url,

headers={

"Accept-Version": "1.0.0",

"Authorization": TOKEN,

"content-type": "application/json"

},

json={

"fields": fields

}

)

r = response.json()

if response.status_code == 200:

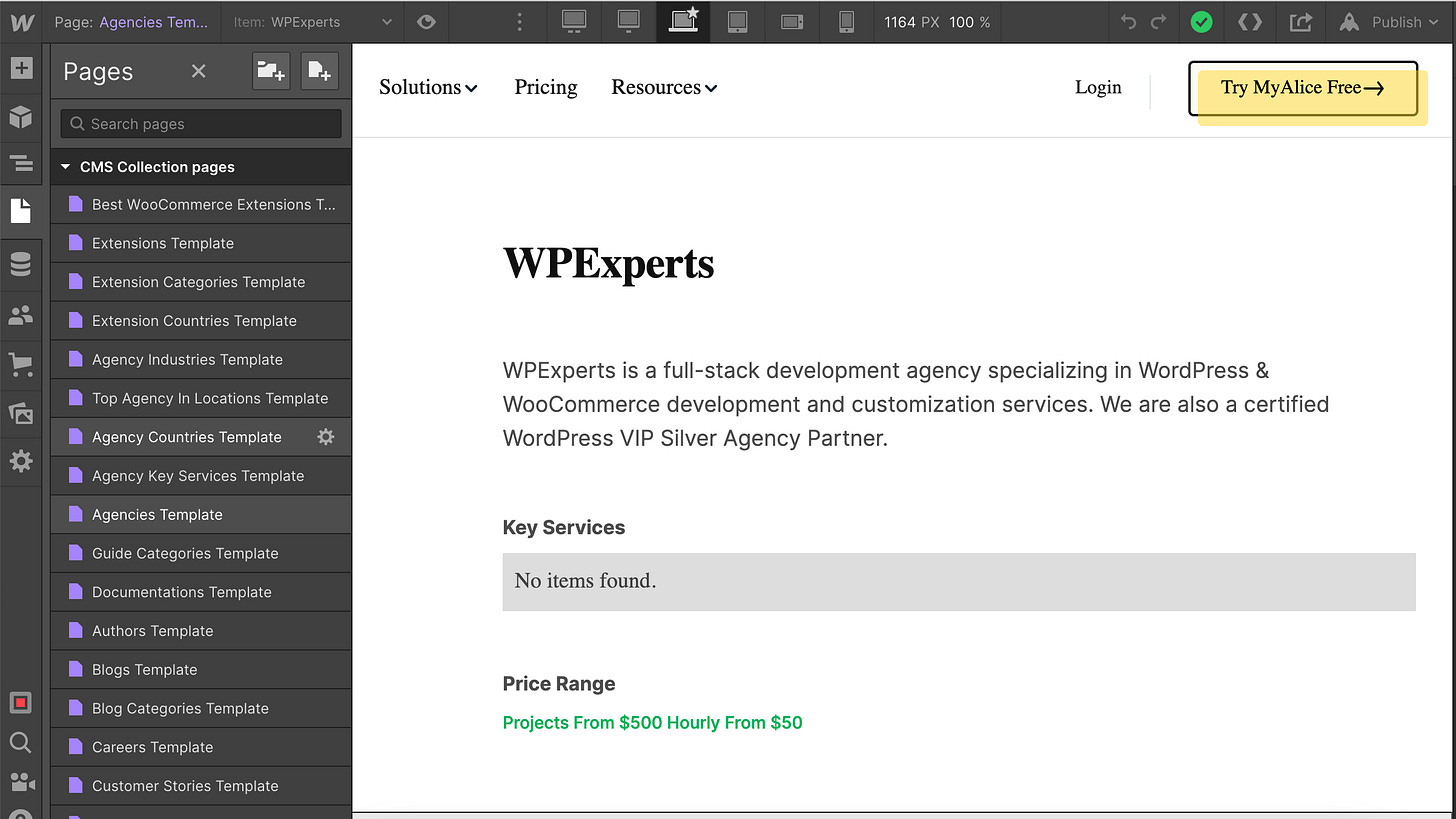

return r.get("_id")Step 3 - Creating Page Template in WebFlow

Well, the main complexities are taken care of now. It’s time to create the template page and connect it to the Webflow Collection that we just created.

Webflow automatically creates a template page for each Collection. You just need to design the page in Webflow and voila, you now have hundreds of pages live in a couple of hours (or days 👀).

Hope this helped. If you need any details (technical or non-technical) feel free to reach out to me.